Top 4 effective techniques for web scraping in 2024

A complete guide 📃 on web scraping, its types and the processes involved.

Web scraping is also referred to as data extraction, data mining, data crawling and web crawling. The easiest explanation of the term “Web Scraping” would be:

The process of extracting useful data from website(s), public or private (access by login and password) to process or store it in an organised form.

Type of sites

- Static:

The site where the content doesn’t change on refresh, or simply every user of that site will see the same content every time.

For example Wikipedia, blogs, documentation, news articles page. - Dynamic:

Where the data on the site changes depending upon the situations, triggers, geolocation, device, search history, time or some events.

For example stock market, product page, a Facebook news feed and Google search results.

Is Web Scraping legal ?

There is always a grey area to that answer. The data available on the public site is legal to use as long as you are working with it ethically.

Most of the sites available online have a file named /robots.txt in their root directory. That serves as a guideline for the scraping bots, which routes/ endpoint can be accessed and which is restricted for bots.

Some of the examples are :

- https://www.google.com/robots.txt

- https://en.wikipedia.org/robots.txt

- https://twitter.com/robots.txt

- https://finance.yahoo.com/robots.txt

A sample /robots.txt like this:

User-agent: *

Sitemap: https://finance.yahoo.com/sitemap_en-us_desktop_index.xml

Sitemap: https://finance.yahoo.com/sitemaps/finance-sitemap_index_US_en-US.xml.gz

Sitemap: https://finance.yahoo.com/sitemaps/finance-sitemap_googlenewsindex_US_en-US.xml.gz

Disallow: /r/

Disallow: /_finance_doubledown/

Disallow: /nel_ms/

Disallow: /caas/

Disallow: /__rapidworker-1.2.js

Disallow: /__blank

Disallow: /_td_api

Disallow: /_remote

User-agent:googlebot

Disallow: /m/

Disallow: /screener/insider/

User-agent:googlebot-news

Disallow: /m/Methods of Web Scraping.

Depending upon the nature of data required our method can vary. We may need only a one-time solution, or we may want to extract the data daily, weekly, monthly, or yearly. Sometimes sites have a login protection firewall or custom rate limiting to access the site or maybe Google ReCaptcha or other bot detection firewall may be applied. In some cases, we may require IP rotations and proxies.

So! that’s clear, a single method can’t be a good fit for all of the problems.

⁃ Chrome Browser Extensions. (Only Free!)

- Instant Data Scraper (Mostly used for extracting multiple records to CSV File)

- Email Extractor (Best for extracting all emails)

- Web Scraper (A no-code free solution, but requires a learning path)

⁃ Google Sheet (with sample sheet)

There are some of the useful Google Sheet functions like:

IMPORTHTMLExtract data from public webpages available like Wikipedia, Worldometers etc.IMPORTDATAExtract the data from.CSVor.TSVfiles link available online.IMPORTXMLSimilar toIMPORTDATA, this function is used to load.XMLdata files on the Google SpreadSheet.

Example

We would want to test out fetching data from a table of this link. Using the function IMPORTHTML

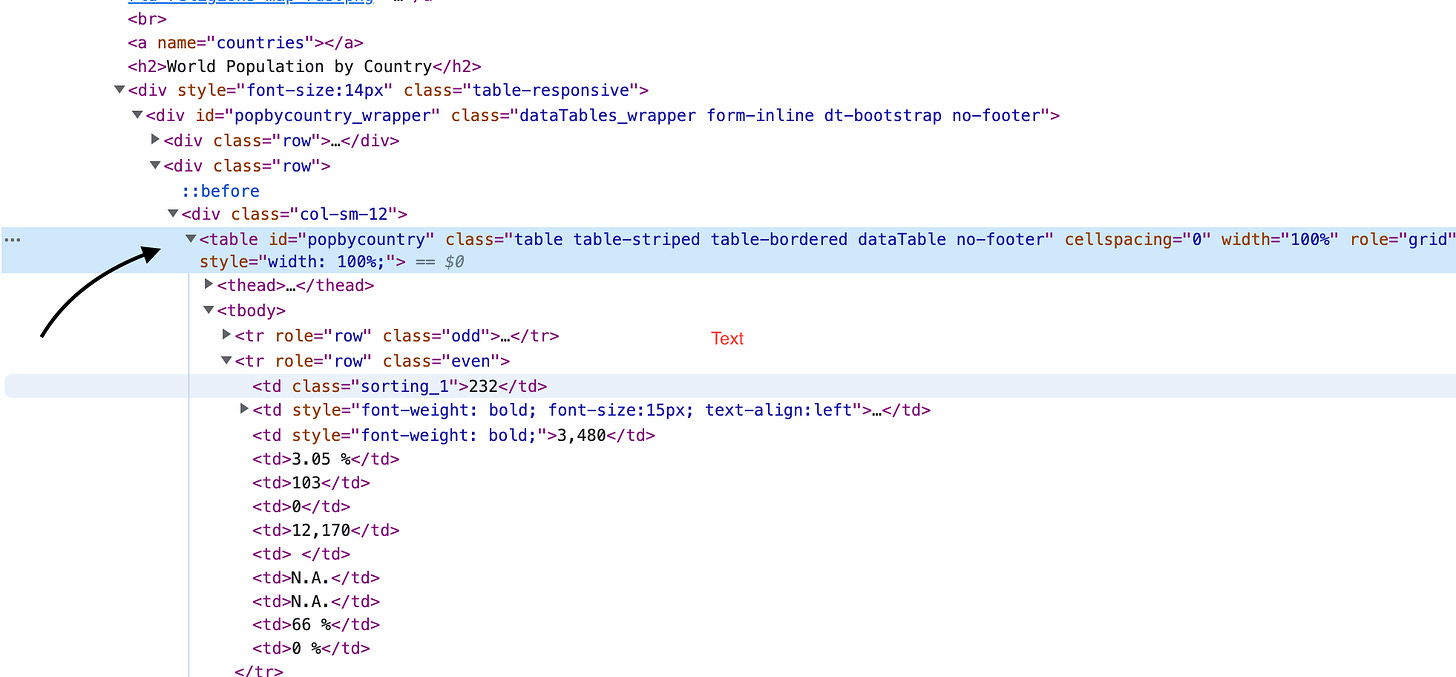

The site’s source code show’s us that it’s a <table> tag which we wanted to fetch.

This is the function that will do the magic ✨.

IMPORTHTML("https://www.worldometers.info/world-population", "table")The Sample GoogleSheet can also be accessed by using the link below, with the formula to fetch the data in the A1 cell of the sheets.

- Python requests, with Beautiful soup

Talking about self-built / custom developed solutions. These 2 python libraries are generally used together to mine the data from the site.

- Requests is used to send an HTTP request to the site, also capable of managing sessions, cookies, authentications, custom headers and adding a proxy layer for IP rotation. Either the response is

HTMLorJSONrequests can manage them. - Beautiful soup on the other hand is used to parse that HTML content from the HTTP response and convert it into a manageable data format for python to process.

The code sample will look like this.👨🏻💻

import requests

from bs4 import BeautifulSoup

response=requests.get("https://www.google.com/")

parser=BeautifulSoup(response.content, 'html.parser')

print(parser.title)

Scrapy is the most advanced open-source web scraping framework that includes all of the required libraries included in its request to mine any data from the site. This is what we call a framework with “batteries included”. It’s fast, open-source, actively maintained and has lots of integration available.

Scrapy Engine follows a pre-defined structured way to manage the requests, scraping workers, processing data and managing and storing it into any form of flat file storage like CSV, Excel or on in a structured database.

Scrapy is mostly recommended for mid and large enterprise-scale projects that require processing a larger amount of data with higher reliability.

Conclusion

Although it is also, possible to use free chrome plugins available when you need quick mining of data into a spreadsheet when it comes to customisation, doing it at scale. You must require to switch to a custom build solution for better reliability, efficiency and time-saving.